In the latest annual Quacquarelli Symonds (QS) World University Rankings report, the University of Toronto climbed two spots, from 19th to 17th. Some senior university administrators, however, have expressed doubts about the accuracy and comprehensiveness of university ranking systems. The Varsity asked a number of experts for their views on the metrics and methodologies behind two of the most prominent rankings: the QS World University Rankings and the Times Higher Education (THE) World University Rankings.

In an interview with The Varsity, U of T president David Naylor cautioned that while U of T’s strength in ranking tables is encouraging, students should take these rankings with a grain of salt: “It’s obviously very hard to boil institutions as complex as universities down to a single number,” he said. McGill University’s principal, Suzanne Fortier, expressed similar concerns. “These aren’t very accurate scientific studies, so the margin of error is big,” she said.

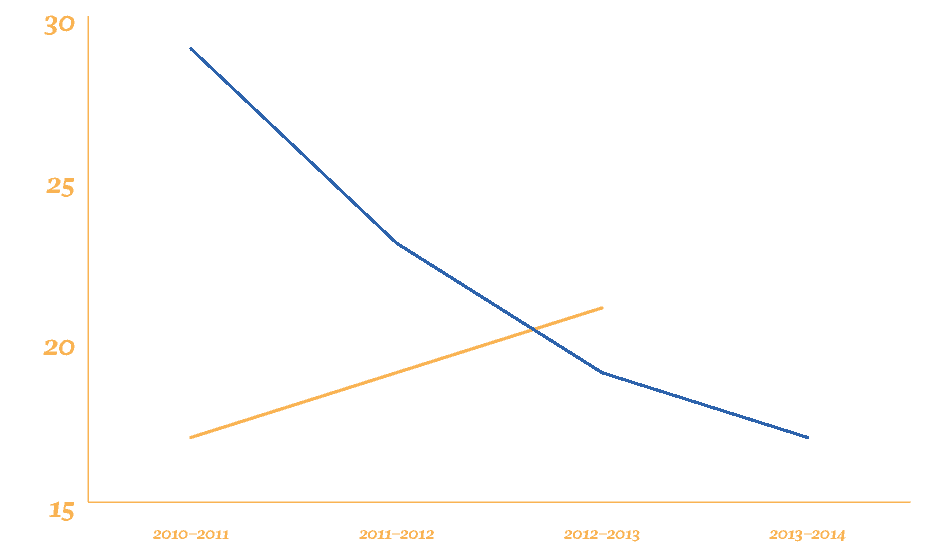

While U of T has steadily climbed the QS tables for the past four years, the opposite trend is apparent in the THE tables. “This doesn’t reflect the fact that we’re getting better or worse, it reflects the fact that two different ranking agencies use two different sets of measures,” said Naylor. He said that the inconsistency across ranking schemes, which rely on different metrics, is part of what makes them difficult to interpret. QS and THE collaborated until 2010, when THE made a dramatic change to its ranking scheme and chose to partner with Thomson Reuters instead. “We moved from six weak indicators to 13 more balanced and comprehensive indicators,” Phil Baty, THE editor at large and rankings editor, told The Varsity.

Measures of Teaching and Learning Environment

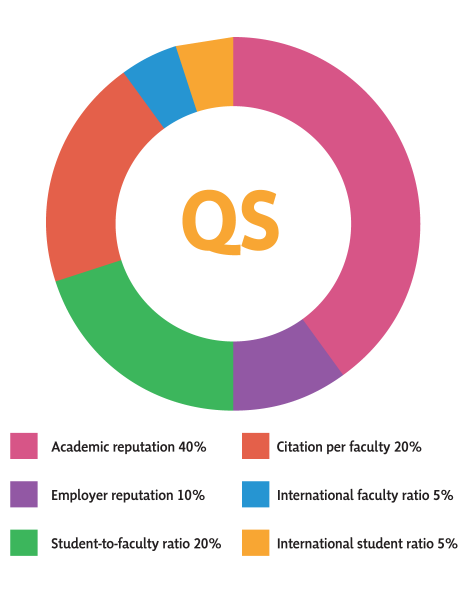

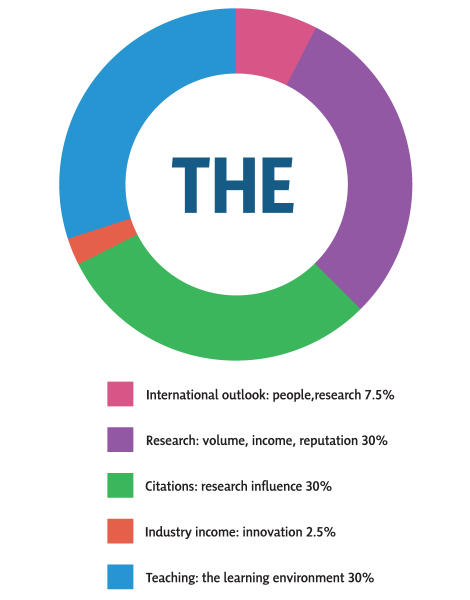

Some ranking systems use the ratio of students to faculty members as a proxy for the quality of a university’s teaching and learning environment. Naylor expressed skepticism about this proxy, arguing that longer-term measures, such as how much students value their university education several years after graduating, are a far better gauge of teaching excellence than student–faculty ratio. The ratio accounts for 20 per cent of the overall score in QS and 4.5 per cent in THE. The latter system uses several other measures to make up the category’s total weight of 30 per cent, including an academic reputation survey and the ratio of doctorates to bachelor’s degrees awarded.

Ben Sowter, head of research at QS, acknowledged that the proxy is not ideal: “Teaching quality, from our viewpoint, is about more than having a charismatic lecturer. It is about the environment, and a key part of that is access to academic support. I think student–faculty ratio is a reasonable proxy measure for this. That is not to say that I am satisfied with it though. We would consider other measures on the provision that they are globally available, sufficiently discerning, and not too responsive to external influences,” he said.

Baty said that, in his view, a heterogeneous approach to measuring teaching quality is essential. “A staff–faculty ratio is an exceptionally crude instrument for judging teaching quality: does the number of waiters in a restaurant tell you how good the food is? It fails to take into account a whole range of issues, like the balance between intimate personal tutorials and large lectures, major variations in student–faculty ratios by subject area, and the data is exceptionally easy to manipulate depending on how you count your faculty,” he said.

Co-dependent measures can generate a Catch-22

Research citations account for 20 per cent of the overall score in QS, which counts them per faculty member, and 30 per cent of the overall score in THE. This measure is meant to capture the influence of a university’s research output.

Because U of T enrolls a large number of undergraduates, a large proportion of its faculty members focus primarily on teaching rather than research. At a Governing Council meeting in June, Naylor said that the way U of T counts its faculty may put it in a Catch-22. “If we don’t count all the faculty, we have a high student–faculty ratio, which is taken as a proxy for educational excellence in some of the ranking systems. If we count all of them, then publications per faculty member fall dramatically, and we lose again,” Naylor said.

Sowter said that QS is aware of this problem and is working to refine the system’s approach to data collection. However, he noted that data specific enough to refine this metric properly are often unavailable, especially in developing countries. Baty said this dilemma is another reason THE gives student–faculty ratio relatively little weight, and emphasized the importance of a balanced range of indicators.

Concerns about Canada’s egalitarian funding model

Baty predicted that Canadian universities will drop in future rankings, citing an overly egalitarian approach to funding as the main factor. He said that while the Canadian government generally distributes funding evenly among universities, other countries have been investing heavily in specific universities in order to gain a competitive edge in the rankings.

Naylor echoed Baty’s concerns in his presentation on university rankings to the Governing Council in June. He argued that investing in flagship research institutions is critical for catalyzing research and innovation, and for attracting both domestic and international talent. “All over the world, you see strategic investments being made by other jurisdictions to ensure that they have a set of institutions that are in that category. So far, what’s been happening in Canada is not aligned in any way, shape, or form in that direction,” he said. As an example, Naylor said that U of T loses millions of dollars each year paying the costs of hosting federally funded research. He argued that the federal government does not cover these costs, and that the resulting shortfall hurts the quality of undergraduate education. “While it’s fantastic that QS says we’re 17th in the world, and we can all have a little brief victory dance about that, or not, depending on our frame of reference, we are still swimming against the tide in this country. And frankly, it’s shameful,” he said.

Sowter disagreed. “With Canada’s population spread over such a vast area, it would seem counter-intuitive to put all the focus on one or two universities,” he said. “From the standpoint of our ranking, Canada looks highly competitive and appears to be holding its ground much more robustly than the US. So I suppose I’ll have to disagree; it seems like Canada has a funding model that works for Canada.” He added that a university’s achievements are not solely the product of its own efforts, but also of its academic, economic, and social environment.

Rankings and university funding priorities

Baty elaborated on the importance of university rankings in a broader context. He stressed that THE rankings provide useful data for both universities and governments. “They are trusted by governments as a national geo-political indicator, and are also used by university managers to help set strategy. The data can help them [universities] better understand where they are falling short, and could influence strategic decisions,” he said.

Sowter argued that ranking results should be used only alongside other metrics, to give a comprehensive picture of where a university stands and where it needs to go. He also stressed that rankings should not weigh heavily on university policy. “I hope they change nothing based on rankings. At least not in isolation. Universities need to remain true to their reason for being and not subvert their identity or mission priorities in pursuit of a ranking,” he said.

Naylor agreed, emphasizing that high rankings should be a by-product of effective administration, not a target that drives it. “We’re on an academic mission, and the rankings are a happy side effect of trying to stay on mission. To actually change a program or an academic decision to deal with a ranking seems incomprehensible to me. If someone’s doing it, they’re either extremely rich as an institution, or very silly,” he said.

With files from The McGill Tribune.