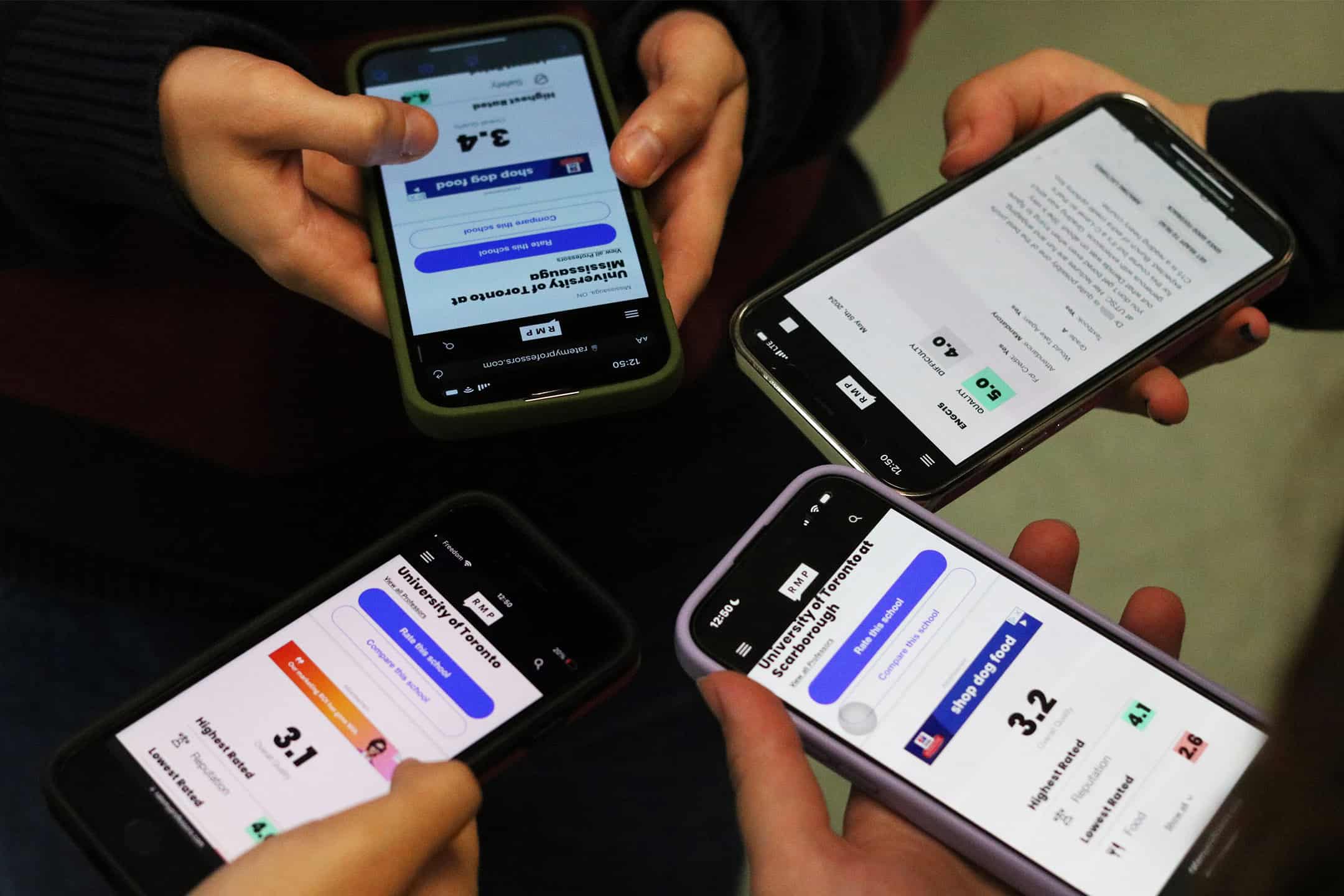

As Rate My Professors (RMP) establishes itself as the go-to site for researching and rating professors, it’s important to be mindful of its role in shaping our perceptions of them.

According to the website’s ‘About’ page, RMP allows students “to figure out who’s a great professor and who’s one you might want to avoid.” However, I believe this is the wrong way to approach the website. We should not let what I see as a flawed system have so much say in determining whether or not we ‘avoid’ certain professors.

All’s fair in 5.0’s and 1.0’s — or is it?

“I am only giving a 5 to make up for my previous rating of 1 — I believe the average 3 is a much more accurate depiction of professor [redacted]’s teaching standard,” reads an anonymous comment on a U of T professor’s RMP page. While I commend the student for adjusting their score, I believe that this comment illustrates how polarizing the site’s numerical rating system can be.

RMP relies on voluntary self-reporting, and a 2021 study on RMP’s data from Texas State University highlights that this type of reporting is often biased and prone to misrepresentation. It tends to overrepresent individuals with strong opinions, particularly negative ones. Consequently, the discourse around a professor and their course becomes skewed by the impassioned voices that dominate it.

This aligns with my experiences on the site, where I frequently encounter a volley of alternating 1.0 and 5.0 ratings for the same professor. I’m often left wondering what truly makes a professor deserving of such extreme scores.

In an interview with The Varsity, fourth-year economics student Dean Locke said that RMP ratings are “generally pretty accurate.” However, he reflected that “using the scores of other students to determine how much I’d like a professor almost feels like treating them like an Amazon product.”

Locke makes a fair point. Numerical rating systems can be quite reductive, especially when evaluating a person. While they provide an at-a-glance indicator that is especially useful during the hectic enrollment process (I’ve been there), I believe they ultimately dissuade students from gaining a deeper understanding of a professor’s teaching style. This may prevent students from giving some professors a fair shot.

What’s in a review?

Lucy Borbash — a fourth-year mathematics major — hesitates to use the site. As Borbash stated in an interview with The Varsity, they prefer to form their own opinions without being influenced by reviews that might deter them from trying things out for themself. Upon visiting a “beloved” professor’s page, they were surprised by his reviews, including one that describes him as “unreasonable and oblivious to student complaints.”

Borbash said, “In my experience, he was actually very accommodating… you could email him and ask about anything, and he [would] periodically check in with us… But if I had read beforehand that he was unsupportive toward his students, I might not [have] ask[ed] for [help].”

I agree with Borbash. If you find yourself with no choice but to take a course with a professor rated 1.0, focusing solely on the negative reviews could do more harm than good. A 2007 study published in the journal Assessment & Evaluation in Higher Education found that exposure to negative RMP ratings led to negative expectations and, consequently, negative experiences for students.

Furthermore, a 2009 experiment published in the Journal of Computer-Mediated Communication found that negative expectations generated by simulated RMP feedback left students with quiz scores on lecture material approximately 10 per cent lower than those of students who read simulated positive comments. The study also indicated that the positive group scored higher than students who were not exposed to any RMP ratings at all. If the ratings are skewed and affect academic performance, should we simply avoid the RMP website altogether?

While I don’t believe the site’s ratings and reviews should be the determining factor in choosing courses, I think RMP can be incredibly useful for gauging what to expect in a class. It’s important to be mindful of how we use the site. We should disregard extreme comments and focus on the information about how the class is conducted. Additionally, it’s important to distinguish between subjective and objective reviews.

Prioritizing comments such as “This professor requires weekly quizzes” over ones like “This professor is a boring lecturer” will be more productive in the long run, both for managing your expectations and for setting yourself up for success in the class.

Athen Go is a fourth-year student studying architecture, English, and visual studies. She is editor-in-chief of Goose Fiction.