We have reached a point where a life without internet access is simply inconceivable for many, and this is understandable. For the first time in human history, we are able to communicate instantaneously with almost anyone in any part of the world. There is also a vast repository of free information within the reach of anyone who seeks it.

But the workings of the internet can seem mysterious and otherworldly. What we call the internet is actually built on a collection of protocols, each of which is a set of rules that lets machines transfer data reliably among themselves.

The predominant protocol that we see in almost every web address is the HyperText Transfer Protocol, commonly known as HTTP. Created by Tim Berners-Lee in 1989, it specifies rules for handling hypertext, which is structured text that uses logical hyperlinks between nodes containing text.

Though HTTP has served us well for the most part, the internet has grown at an unprecedented rate and continues to expand at a frenetic pace. As a result, some problems have revealed themselves over the years.

The main issue with HTTP is that it transfers a document, or a group of documents, from a single computer housed in one location (the server) to another computer that could be in a completely different location (the client). Every request has to travel to that one server and back, no matter how far away it is or how many other clients are asking at the same time. This contributes to the slow and expensive internet that many currently experience.

Further, this tends to be unreliable: if a single link in an HTTP transfer cuts out, the entire connection is severed. This raises the question: can we do better?

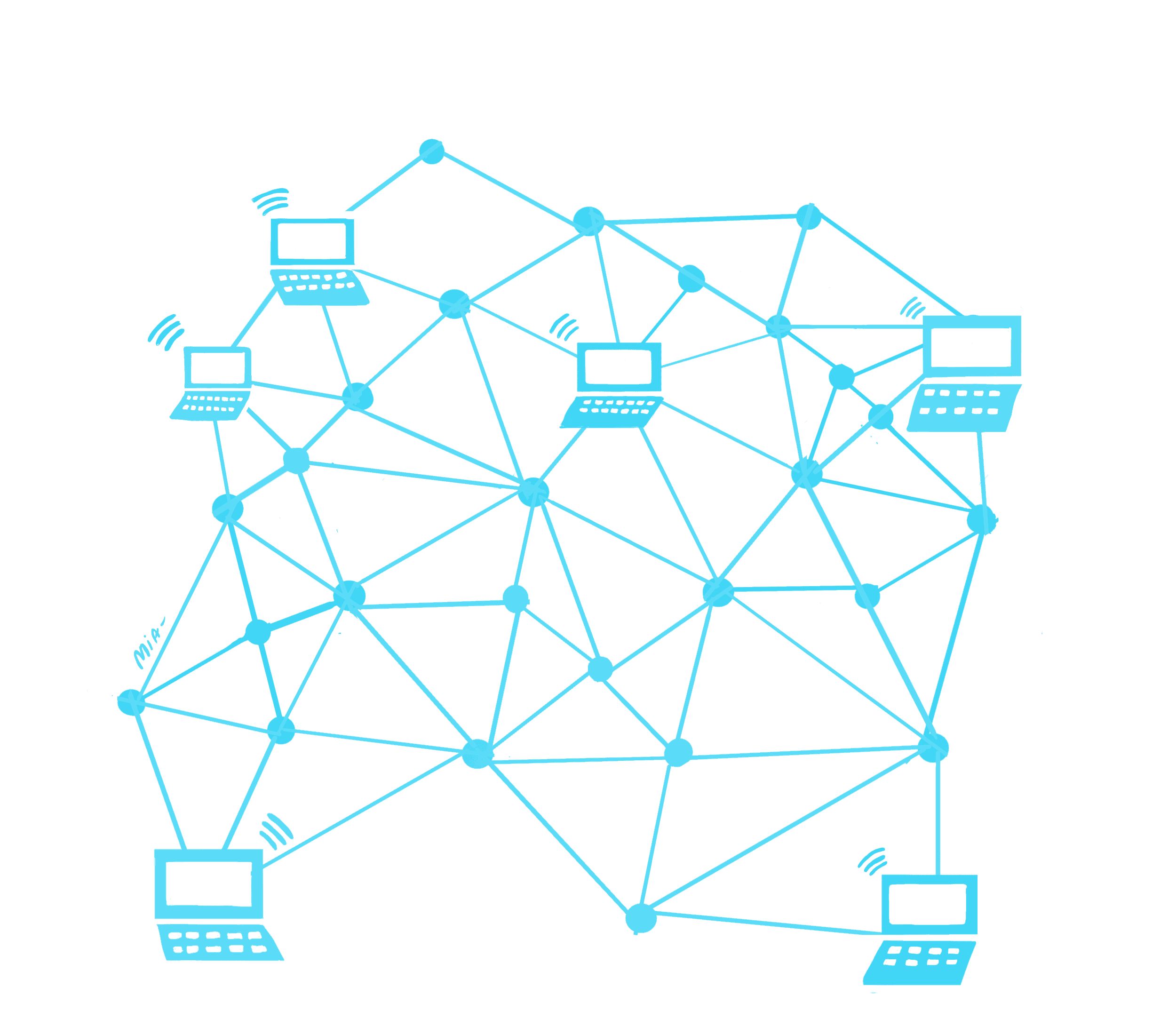

Fortunately, this question is music to the computer scientist’s ears, and one proposed solution is the InterPlanetary File System (IPFS). Instead of delivering a document directly from server to client each time, this protocol allows a client trying to access the document to stitch together pieces of that document from other nearby clients who have already received it or are in the process of receiving it.

This might sound familiar because it is much like how torrents and other peer-to-peer networks work. When someone downloads a file through a torrent, they are actually receiving different pieces of the file from ‘peers’ who are uploading the pieces they already hold, even if they have not finished downloading the whole file themselves. Peers that hold a complete copy and keep uploading it are said to be ‘seeding.’

Even the user downloading the document is part of this process, and the more peers there are, the faster the download tends to be. The peers’ combined upload speed determines how quickly the pieces of the document become available, and how quickly the client puts these pieces together on their machine depends on their own download speed.
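The piece-by-piece download described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any real torrent or IPFS client is implemented: the peers are plain dictionaries, and the names `PIECE_SIZE`, `split_into_pieces`, and `download` are made up for illustration. The one real idea it shows is that the client knows the expected hash of every piece, so it can accept a piece from any peer and still check that it is genuine.

```python
import hashlib

PIECE_SIZE = 4  # bytes per piece; unrealistically small, for illustration only


def split_into_pieces(data: bytes, size: int = PIECE_SIZE) -> list:
    """Cut a document into fixed-size pieces (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def download(piece_hashes: list, peers: list) -> bytes:
    """Rebuild a document by taking each piece from any peer that holds a valid copy."""
    assembled = []
    for index, expected_hash in enumerate(piece_hashes):
        for peer in peers:
            piece = peer.get(index)  # None if this peer lacks the piece
            if piece is not None and hashlib.sha256(piece).hexdigest() == expected_hash:
                assembled.append(piece)
                break
        else:
            # No peer offered a valid copy of this piece.
            raise LookupError(f"no peer has piece {index}")
    return b"".join(assembled)


original = b"hello, distributed web!"
pieces = split_into_pieces(original)
piece_hashes = [hashlib.sha256(p).hexdigest() for p in pieces]

# Two hypothetical peers, each holding only half of the pieces.
peer_a = {i: p for i, p in enumerate(pieces) if i % 2 == 0}
peer_b = {i: p for i, p in enumerate(pieces) if i % 2 == 1}

result = download(piece_hashes, [peer_a, peer_b])
print(result == original)
```

Neither peer holds the whole document, yet the client ends up with a complete and verified copy. A real client would request pieces from many peers in parallel, which is where the speed-up comes from.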

You can watch this happen in any torrent client while downloading (hopefully legal) material. The peer list shows each peer’s IP address, location, percentage of the file downloaded, and upload speed, among other information.

Another massive advantage of IPFS is that any media on it is fully distributed. Instead of a link referring to the location of a document on a particular server, as in HTTP, an IPFS link refers to the ‘hash’ of the file’s contents. Think of a hash as a fingerprint: for any input, be it a text file, a video, or anything else, a hash function produces a fixed-length sequence of characters. The same input always yields the same sequence, and it is practically impossible to find two different inputs that yield the same one.
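To make the idea of a content hash concrete, here is a minimal sketch using SHA-256 from Python's standard library. The function name `content_address` is hypothetical, and real IPFS identifiers wrap the digest in additional encoding, so treat the hex string below as a stand-in for an actual IPFS link rather than the real format.

```python
import hashlib


def content_address(data: bytes) -> str:
    """Return a hex SHA-256 digest to use as a stand-in for a content-based link."""
    return hashlib.sha256(data).hexdigest()


first = content_address(b"hello world")
second = content_address(b"hello world")   # identical content
third = content_address(b"hello world!")   # one extra character

print(first == second)  # identical content gives an identical address
print(first == third)   # any change in content gives a different address
print(len(first))       # the digest length is fixed regardless of input size
```

Because the address depends only on the bytes, it does not matter which machine supplies the file: anyone holding the same content holds something that matches the same link.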

If someone tries to access a file, IPFS will essentially ask the network, ‘Does anyone have a file corresponding to this hash?’ Any clients that happen to possess it will reply, and a connection is then established between the device asking for the document and the clients that answered.
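The question-and-answer exchange above can be modelled roughly as follows. This is a simplification under loud assumptions: the `Peer` class and the `find_providers` and `fetch` functions are invented for this sketch, and the code simply polls every peer in a list, whereas a real network relies on a distributed lookup structure rather than asking everyone directly.

```python
import hashlib


class Peer:
    """A hypothetical network participant that stores content keyed by its hash."""

    def __init__(self, name: str):
        self.name = name
        self.store = {}  # maps hash (hex string) -> content bytes

    def add(self, data: bytes) -> str:
        key = hashlib.sha256(data).hexdigest()
        self.store[key] = data
        return key

    def has(self, key: str) -> bool:
        return key in self.store


def find_providers(key: str, peers: list) -> list:
    """'Does anyone have a file corresponding to this hash?'"""
    return [peer for peer in peers if peer.has(key)]


def fetch(key: str, peers: list) -> bytes:
    """Take the content from any provider, checking it against the requested hash."""
    for peer in find_providers(key, peers):
        data = peer.store[key]
        if hashlib.sha256(data).hexdigest() == key:
            return data
    raise LookupError("no peer could provide valid content for this hash")


alice, bob, carol = Peer("alice"), Peer("bob"), Peer("carol")
key = alice.add(b"a shared document")
carol.add(b"a shared document")
bob.add(b"something else")

network = [alice, bob, carol]
providers = [peer.name for peer in find_providers(key, network)]
document = fetch(key, network)
print(providers, document)
```

The requester never names a server. It names the content, and whichever peers hold matching bytes can serve it, which is also why a tampered copy is easy to reject.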

This is extremely powerful for another reason: a document on the IPFS network stays available for as long as at least one node keeps a copy, so there is no single server whose removal takes it offline. This can be used to resist active censorship by a government or other authoritarian body, and in fact, it has already been used for this purpose.

During the 2017 Catalan independence referendum, the Spanish government actively tried to clamp down on websites and other online content run by the referendum’s proponents. In response, supporters turned to IPFS to host a website that anyone could access and that no single authority could easily block. To block it, the government would have had to cut off internet usage for all peers on the network, effectively denying internet access to everyone.

This revolutionary form of file transfer is still in its infancy. Its potential deserves recognition as an alternative to today’s sluggish, centralized internet, which is fraught with annoying ads and malicious distributed denial-of-service attacks and may eventually bring about its own undoing.