Sometimes, I feel like I’m standing on the threshold of the future, as though I can see it streaming in like light from under the door. Attending the Mersivity Symposium last December was one such moment.

The Mersivity Symposium unites pioneering technology with sustainability. The event hosted a diverse group of participants, including researchers, authors, economists, and innovators from esteemed institutions such as U of T, the Massachusetts Institute of Technology (MIT), and the Institute of Electrical and Electronics Engineers (IEEE). Its focus was the integration of humanistic artificial intelligence (AI) and advanced technologies.

In his opening remarks for the event, U of T President Meric Gertler captured the essence of the symposium: “The ideas raised in this symposium remind us of the vital importance of harnessing technology in the service of people and the planet.”

A highlight for me was the presentation by two young researchers from MIT, Akarsh Aurora and Akash Anand. They invited us to envision a future where thoughts could be directly translated into words.

Imagine a world where you wouldn’t need a mouse and keyboard to interact with your computer and could simply use your thoughts. You could turn on the lights with just a thought and surf the channels without even having to find the remote, undisturbed from that cozy spot on the couch. You could set an alarm by just thinking about it before you drifted off into sound sleep. From a humanistic standpoint, they emphasized the importance of such a technology to people with speech impediments and to those who have, in some way, lost the ability to communicate.

The talk given by Aurora and Anand helps us pull back the curtain on how such futuristic research might be done.

The electrode implant problem and the alternative

To convert thoughts to words, researchers must first receive the raw data of thoughts. On a physical level, thoughts are primarily electrical signals in the brain. One way to read these signals is to stick an electrode smack-dab in the middle of the brain and observe the electrical signals whizzing by.

Researchers have done this before. However, the problem with such implants is that they interfere with and start to erode the brain tissue around them. After a while, implants also become less effective, which means an implant must be replaced periodically. If you’re anything like the general consumer, you’ll agree that the idea of invasive implants in your brain sounds absolutely odious. You’ll likely never buy that.

The alternative? Non-invasive brain-computer interfaces (BCIs), such as those based on electroencephalography (EEG). An EEG headset resembles a delicate cap fitted with numerous wires and small electrodes, placed across the scalp to detect the brain’s electrical activity. You might have encountered one on Stranger Things or medical TV shows. Since it’s non-invasive, EEG is what neuroscientists commonly use to gather data from the brain.

Reconstructing words from EEG signals

For their study, Anand and Aurora recorded the EEG data of participants listening to the first chapter of Alice in Wonderland. Their goal was to reconstruct the words the participants were listening to from only EEG data.

But going from an EEG signal to a word is a herculean task! The EEG picks up a lot of excess ‘noise’ in the data — even blinking generates noise — so Anand and Aurora first had to “clean up” the EEG signals by removing the noise through filters.
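To give a feel for what “cleaning up” a signal can mean, here is a minimal sketch of one common kind of filter, a band-pass filter, which keeps only a chosen range of frequencies. The talk summary does not say which filters the researchers used, so everything here is an assumption for illustration: the sampling rate, the 1 to 40 Hz band, the synthetic “brain rhythm,” and the helper name `bandpass_fft` are all made up.

```python
import numpy as np

def bandpass_fft(signal, fs, low_hz, high_hz):
    """Keep only frequency components between low_hz and high_hz (inclusive)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    return np.fft.irfft(spectrum * keep, n=len(signal))

fs = 250                                   # samples per second (assumed)
t = np.arange(4 * fs) / fs                 # four seconds of synthetic data

rhythm = np.sin(2 * np.pi * 10 * t)        # 10 Hz stand-in for brain activity
drift = 2.0 * np.sin(2 * np.pi * 0.5 * t)  # slow drift, e.g. from movement
hum = 0.5 * np.sin(2 * np.pi * 60 * t)     # electrical interference

noisy = rhythm + drift + hum
cleaned = bandpass_fft(noisy, fs, low_hz=1.0, high_hz=40.0)

print("largest error before filtering:", np.max(np.abs(noisy - rhythm)))
print("largest error after filtering: ", np.max(np.abs(cleaned - rhythm)))
```

Real EEG noise is messier than two tidy sine waves, and artifacts such as blinks usually need more specialized techniques, but the idea is the same: throw away the parts of the recording that are unlikely to be brain activity.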

To convert brain activity into words, they used a pre-trained AI to generate candidate word sequences representing predictions of the words a person was hearing. An AI-generated word sequence would be considered “correct” if it matched the sequence a participant was actually listening to at that moment of the recording.

Anand and Aurora then used a model to generate a predicted EEG response for each candidate word sequence. Next, they scored each sequence by how much its predicted EEG response differed from the true EEG response: the smaller the difference, the more likely the candidate. This scoring system allowed them to pick out the most likely of the many AI-generated word sequences.
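The generate, predict, and score loop described above can be sketched in a few lines of Python. This is a toy under loud assumptions, not the researchers’ method: `toy_predict_eeg` is a hypothetical stand-in for their EEG prediction model (it just turns each word into its length), the candidate list is hand-written instead of AI-generated, and the “recording” is invented.

```python
import math

def toy_predict_eeg(words):
    """Hypothetical stand-in for a model that predicts an EEG response.

    Each word contributes a single number: its length.
    """
    return [float(len(word)) for word in words]

def mismatch(predicted, observed):
    """Euclidean distance between two responses; lower means a better fit."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)))

def best_candidate(candidates, observed):
    """Return the candidate whose predicted response is closest to the recording."""
    return min(candidates, key=lambda words: mismatch(toy_predict_eeg(words), observed))

# Hand-written candidates standing in for AI-generated word sequences.
candidates = [
    ["alice", "was", "beginning"],
    ["alice", "is", "sleeping"],
    ["the", "rabbit", "ran"],
]

# Invented "recording": close to the toy response for the first candidate.
observed = [5.2, 2.9, 9.1]

for words in candidates:
    print(words, round(mismatch(toy_predict_eeg(words), observed), 3))
print("best guess:", best_candidate(candidates, observed))
```

The appeal of this design is that the hard direction (brain signal to words) is never solved directly. Instead, the system only needs to go from words to a predicted signal and then compare, which is an easier problem to model.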

So far, the result doesn’t have quite the accuracy we would desire, but it is a notable step in the right direction.

The groundbreaking research presented by Aurora and Anand at the Mersivity Symposium serves as a beacon of what the future might hold in the realm of BCIs. Their pursuit of translating neural signals into continuous language reflects the brilliant fusion of neuroscience and advanced technology and exemplifies the symposium’s theme of harnessing technology for the greater good, blending human intellect with artificial intelligence.

Other talks: AI for veganism and the Freehicle

Other talks included a presentation from artist Reid Godshaw, discussing how AI can be used creatively to magnify our artistic voice. He showed a series of gorgeous and moving artworks he created with the help of AI to spread awareness of veganism. Using the digital artwork, he highlighted animals’ similarities to humans and spotlighted the cruelty behind the meat industry.

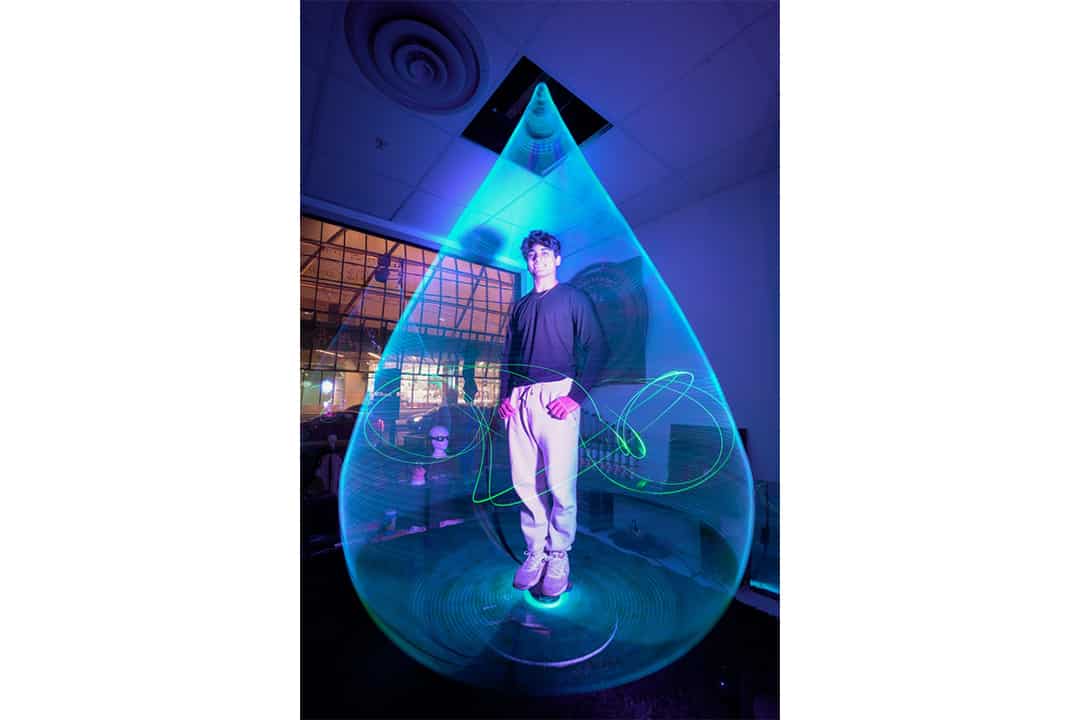

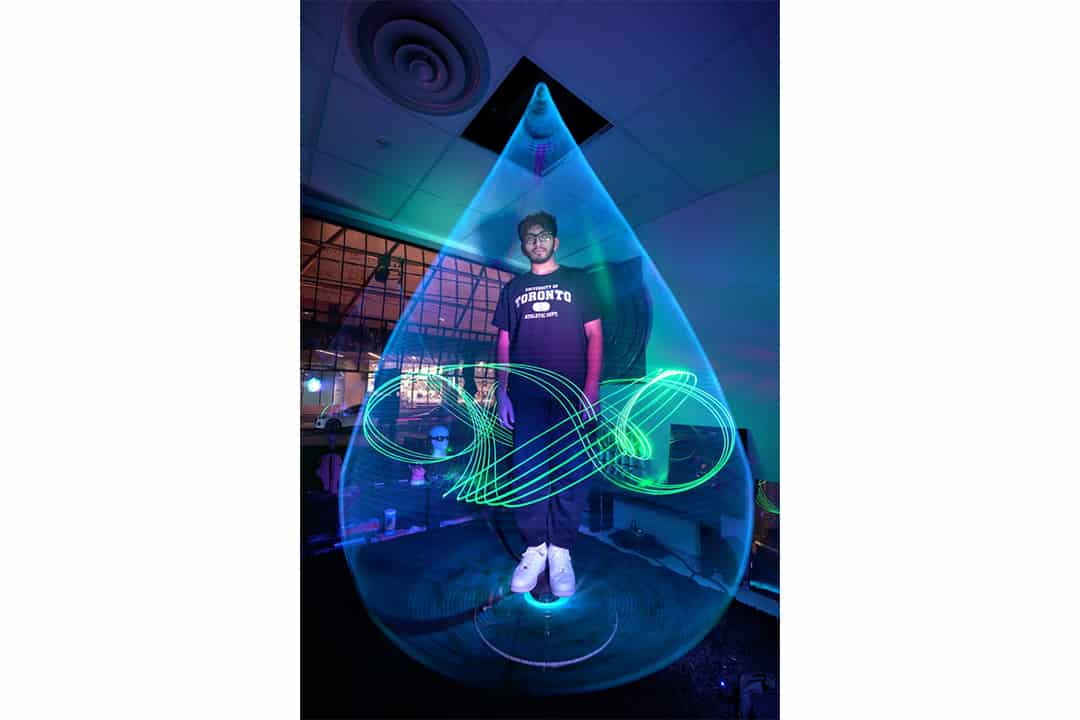

Engineer and U of T Professor Steve Mann presented a concept of a “Freehicle”: a free vehicle with the potential to reconfigure itself to land on solid ground or on water. He highlighted the importance of accessibility and the improvement in quality of life that an accessible means of transport could bring to everyone, and especially to people with disabilities.

In conclusion, the Mersivity Symposium showcased innovative research and showed how technology and humanity can coalesce in unprecedented ways. As the symposium drew to a close, I felt as though the ground had shifted beneath my feet on the metaphorical precipice of time where I was standing. I thought of the popular internet meme that proclaims: “The future is now, old man.”
